The following is a selection of projects to which I made an essential contribution or which I led. I would like to thank all collaborators who contributed to the success of the respective projects, especially Ernst Kruijff (BRSU, Germany), George Ghinea (Brunel, UK), André Hinkenjann (BRSU, Germany), Kiyoshi Kiyokawa (NAIST, Japan), Wolfgang Stürzlinger (SFU, Canada), Bernhard Riecke (SFU, Canada), Hrvoje Benko (Facebook Reality Labs, US) and David Eibich (BRSU, Germany).

Haptics for Virtual Reality

Within this project we explore a novel haptic display based on a robot arm attached to a head-mounted virtual reality display. It provides localized, multi-directional and movable haptic cues in the form of wind, warmth, moving and single-point touch events and water spray to dedicated parts of the face not covered by the head-mounted display.

Robot Augmentations

This project investigates how haptic feedback on the feet can increase spatial perception in remote environments. For this purpose, a dedicated prototype was developed that maps proximity and collision to the user's feet. More information soon...

Mobile Device Haptics

The project is part of my doctoral thesis, in which I explore the augmentation of visual technologies. Within this project, different prototypes were implemented to improve performance and perception.

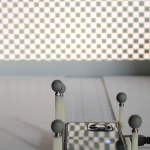

Mobile Projection Mapping

Within this project we introduced a novel zooming interface deploying a pico projector that, instead of a second visual display, leverages audioscapes for contextual information. The technique enhances current flashlight metaphor approaches, supporting flexible usage within the domain of spatial augmented reality to focus on object- or environment-related details.

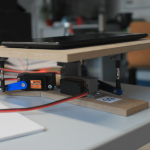

Surface Haptics

Within this project we developed a novel system combining a dynamically adjustable flexible surface with a rigid tablet display. The system allows for mostly unexplored ways of tactile and haptic feedback for pen-based interaction.

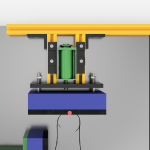

Multisensory Evaluation Platform

The goal of this project was the design and implementation of a multisensory development and experimental platform. The current platform supports wind, smell and sound, but can be extended as needed.

View management – augmented reality

Multisensory interaction for augmented reality. Research and development of a novel augmented reality system with visual, auditory and tactile stimuli.

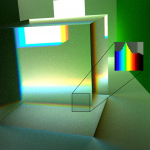

Olive – light simulation

Physically based illumination for optimizing location-based target spectra. Intelligent control of spectrally measured lighting in real time (Human-Centric Lighting).

PlaSMoNa – Indoor Navigation

This project aimed to connect users of social networks in a targeted, location- and event-related way. PlaSMoNa therefore focused on indoor navigation methods on Android smartphones.

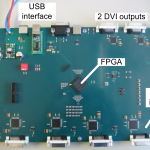

Pixelstrom – Parallel Rendering

The goal of this project was to reduce network traffic and latency to increase performance in parallel visualization systems. Initial data distribution is based on a standard Ethernet network, whereas image combining and returning differs from traditional parallel rendering methods. Calculated sub-images are grabbed directly from the DVI ports for fast image compositing by an FPGA-based combiner.

InnovaDesk – cost-efficient responsive workbench

In this project we developed a cost-efficient responsive workbench for modeling, interaction, multi-touch and demonstrators for arbitrary end-users. The software part we are currently developing is based on our VE framework basho, which supports different rendering algorithms and many different interaction and input devices.